Generating the Future: A Comparison of GANs and Transformers

Generative Artificial Intelligence (AI) has gained immense popularity in recent years due to its ability to create new and diverse content, such as images, music, and text. Two key architectures of generative AI that have made significant contributions to this field are Generative Adversarial Networks (GANs) and Transformers.

Fundamentals of Generative Adversarial Networks (GANs)

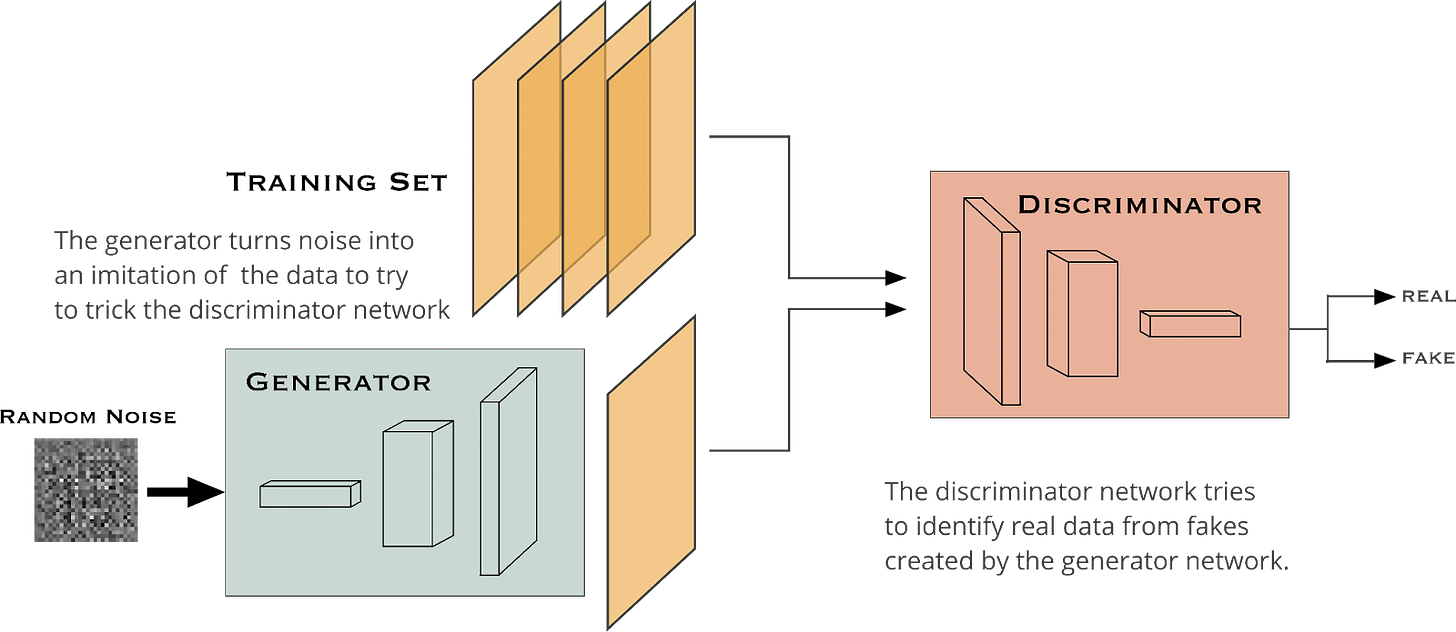

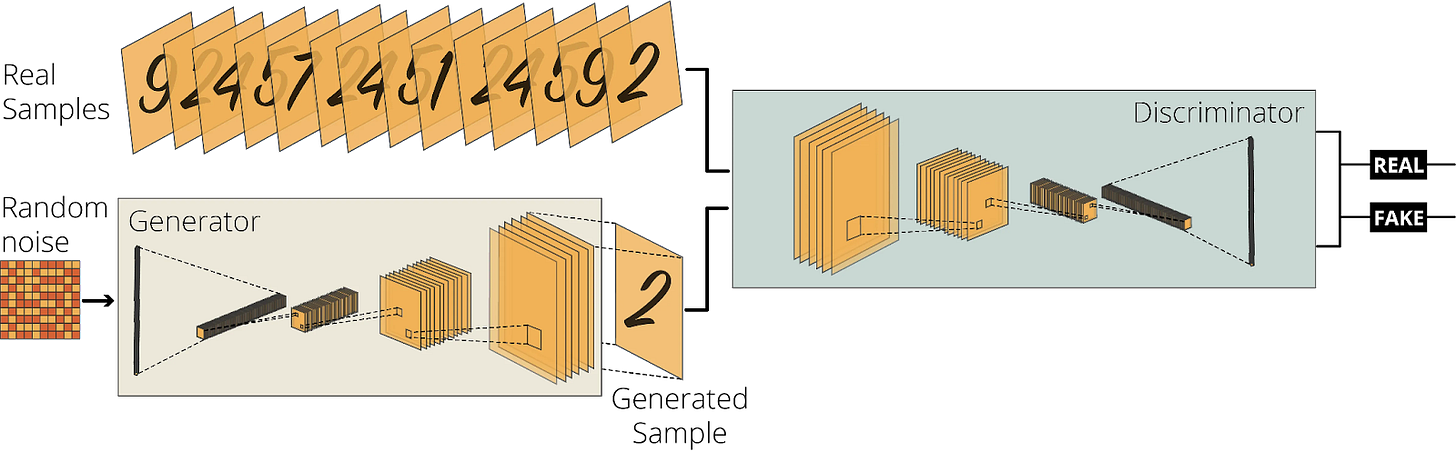

GANs were introduced by Ian Goodfellow and colleagues in 2014 and have since become one of the most popular generative AI architectures. A GAN consists of two neural networks, a generator and a discriminator, that are trained against each other. The generator takes a random noise vector as input and produces a new sample, while the discriminator examines samples and tries to distinguish generated ones from real data. The generator improves by learning to fool the discriminator, and the discriminator improves by learning to tell real and generated samples apart more accurately. GANs have been used to generate high-quality images, videos, and even music.

Given a training set, a GAN learns to generate new data with the same statistics as the training set. For example, a GAN trained on photographs can generate new photographs that look at least superficially authentic to human observers and have many realistic characteristics. The core idea of a GAN is "indirect" training through the discriminator, a second neural network that estimates how "realistic" its input looks and is itself updated throughout training. This means the generator is not trained to minimize the distance to a specific image, but to fool the discriminator, which lets the model learn in an unsupervised manner. GANs resemble mimicry in evolutionary biology, with an evolutionary arms race between the two networks.

Figure: Mimesis in Ctenomorphodes chronus, camouflaged as a eucalyptus twig.

GANs pit two networks against each other in a zero-sum game, similar to a tug-of-war. One network, the discriminator, learns to tell real samples from fake ones, while the other, the generator, tries to produce fake samples that are indistinguishable from real ones in order to fool the discriminator. The generator produces a fake sample by passing a latent seed (usually an array of random numbers, though in principle any arbitrary data) forward through the network. The main challenge in training GANs is keeping the two networks balanced. If the discriminator is too weak, its feedback gives the generator no useful signal; if it is too strong, it rejects every fake with high confidence and the generator's gradients can become too small to learn from. Furthermore, each network's loss depends on the other network, which keeps changing, so the loss values alone do not show whether the generator is performing well. Identifying good metrics to monitor GAN training and assess generated samples therefore remains an open problem, and the right choice depends heavily on the application.
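
In the original paper by Goodfellow et al. (2014), this zero-sum game is written as a minimax problem over a value function V(D, G), where D(x) is the discriminator's estimated probability that x is real and G(z) is the generator's output for latent seed z. Assuming that "LossReal" and "LossFake" in the training steps that follow are the usual cross-entropy terms, the objective can be rewritten in terms of them: maximizing V over D is the same as minimizing LossReal + LossFake, and since LossReal does not depend on G, minimizing V over G is the same as maximizing LossFake.

```latex
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
                      + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

\text{LossReal} = -\,\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big], \qquad
\text{LossFake} = -\,\mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

V(D, G) = -\big(\text{LossReal} + \text{LossFake}\big)
```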

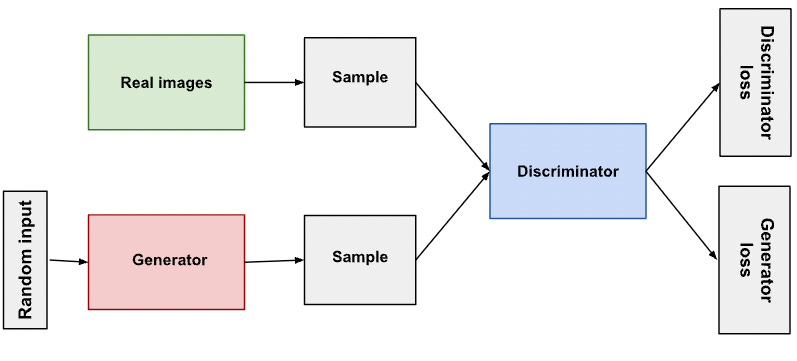

After a random (or pretrained) initialization of the two networks, adversarial training repeatedly loops over the following steps:

Discriminator update:

Sample a batch of m real data points

Sample m latent seeds, and generate m fakes

Update the discriminator to minimize the sum of "LossReal" and "LossFake"

Generator update:

Sample m latent seeds, and generate m fakes

Update the generator to maximize "LossFake"

The generator and the discriminator are updated alternately, never at the same time: each network is trained while the other is held fixed, which helps the training converge.
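
The alternating procedure can be sketched on a deliberately tiny problem. The following pure-Python example is a minimal sketch under assumptions of our own, not the procedure of any particular library: the real data is one-dimensional and drawn from N(4, 1); the hypothetical generator is G(z) = a*z + b; the hypothetical discriminator is a logistic regression D(x) = sigmoid(w*x + c); and LossReal and LossFake are taken to be the mean cross-entropy terms -log D(x) and -log(1 - D(G(z))). Gradients are written out by hand so that each update visibly touches only one network's parameters.

```python
import math
import random

# Toy 1-D GAN (hypothetical setup chosen for illustration):
#   real data      x ~ N(4, 1)
#   generator      G(z) = a*z + b,          latent seed z ~ N(0, 1)
#   discriminator  D(x) = sigmoid(w*x + c), estimated probability that x is real
REAL_MEAN, REAL_STD = 4.0, 1.0


def sigmoid(u):
    # Numerically stable logistic function.
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def softplus(u):
    # Numerically stable log(1 + exp(u)).
    return max(u, 0.0) + math.log1p(math.exp(-abs(u)))


def generate(gen, zs):
    a, b = gen
    return [a * z + b for z in zs]


def loss_real(disc, xs):
    # LossReal: mean of -log D(x) over real samples, since -log sigmoid(u) = softplus(-u).
    w, c = disc
    return sum(softplus(-(w * x + c)) for x in xs) / len(xs)


def loss_fake(disc, ys):
    # LossFake: mean of -log(1 - D(y)) over fakes, since -log(1 - sigmoid(u)) = softplus(u).
    w, c = disc
    return sum(softplus(w * y + c) for y in ys) / len(ys)


def discriminator_step(disc, xs, ys, lr):
    # One gradient-descent step on LossReal + LossFake w.r.t. (w, c); the generator is untouched.
    w, c = disc
    gw = gc = 0.0
    for x in xs:  # d/du softplus(-u) = -(1 - sigmoid(u))
        g = -(1.0 - sigmoid(w * x + c)) / len(xs)
        gw, gc = gw + g * x, gc + g
    for y in ys:  # d/du softplus(u) = sigmoid(u)
        g = sigmoid(w * y + c) / len(ys)
        gw, gc = gw + g * y, gc + g
    return (w - lr * gw, c - lr * gc)


def generator_step(gen, disc, zs, lr):
    # One gradient-ascent step on LossFake w.r.t. (a, b); the discriminator is held fixed.
    a, b = gen
    w, c = disc
    ga = gb = 0.0
    for z in zs:
        g = sigmoid(w * (a * z + b) + c) * w / len(zs)  # this sample's share of dLossFake/db
        ga, gb = ga + g * z, gb + g
    return (a + lr * ga, b + lr * gb)


def train(steps=2000, m=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    gen, disc = (1.0, 0.0), (0.0, 0.0)  # (a, b) and (w, c)
    for _ in range(steps):
        # Discriminator update: m real samples and m fakes from fresh latent seeds.
        xs = [rng.gauss(REAL_MEAN, REAL_STD) for _ in range(m)]
        ys = generate(gen, [rng.gauss(0.0, 1.0) for _ in range(m)])
        disc = discriminator_step(disc, xs, ys, lr)
        # Generator update: new latent seeds, discriminator frozen.
        zs = [rng.gauss(0.0, 1.0) for _ in range(m)]
        gen = generator_step(gen, disc, zs, lr)
    return gen, disc
```

Calling `train()` returns the final generator parameters (a, b) and discriminator parameters (w, c). Because this discriminator is linear in x, it mostly reacts to a difference in means, so at best the generator's offset b is pushed toward the real mean of 4; with other learning rates or step counts the parameters may oscillate instead of settling, which is the balancing problem described earlier.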

The following visuals depict how the discriminator and the generator are applied:

Fundamentals of Transformers

Transformers, on the other hand, were introduced by Vaswani et al. in 2017, initially for natural language processing tasks such as machine translation. Transformers are based on the self-attention mechanism, which allows the model to attend to different parts of the input sequence when producing each part of the output. Unlike recurrent neural networks (RNNs), which process a sequence one element at a time, Transformers process all positions of the input sequence in parallel. This makes training much faster on modern hardware and makes it easier to relate distant positions in a long sequence. Transformers have been used for a variety of tasks, such as machine translation, text generation, and image captioning.

Figure: The Transformer model architecture (Vaswani et al., 2017).

Self-attention Example

Language relies heavily on context. Consider this sample:

Second Law of Robotics

A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

This sentence contains three expressions that refer to other parts of the text, and none of them can be understood or processed without the context they refer to. When a model processes this sentence, it has to be able to know that:

“it” refers to the robot

“such orders” refers to the earlier part of the law, namely “the orders given it by human beings”

“The First Law” refers to the entire First Law

Self-attention is a technique that lets a model take the context of each word into account before passing it through a neural network. Each word's vector is multiplied by three learned weight matrices to produce a query vector, a key vector, and a value vector. To process a given word, the model compares that word's query with the key of every word in the segment (a dot product, scaled by the square root of the key dimension and normalized with a softmax) to obtain relevance scores. It then computes a weighted sum of the value vectors, using those scores as weights, so that the final contextual representation is influenced most by the relevant words.
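
A minimal sketch of single-head scaled dot-product self-attention in plain Python, following the formula softmax(QK^T / sqrt(d_k)) V from Vaswani et al. (2017), where d_k is the key dimension. The projection matrices `w_q`, `w_k`, and `w_v` are hypothetical placeholders that a real model would learn, and masking, multiple heads, and batching are left out.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    # matrix is a list of rows; returns matrix @ vec.
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def self_attention(embeddings, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over one sequence.

    embeddings: one vector per word; w_q, w_k, w_v: projection matrices
    (hypothetical placeholders here; a real model learns them).
    Returns the contextual output vectors and the attention weights per word.
    """
    queries = [matvec(w_q, e) for e in embeddings]
    keys = [matvec(w_k, e) for e in embeddings]
    values = [matvec(w_v, e) for e in embeddings]
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this word's query with every word's key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        wts = softmax(scores)
        # Weighted sum of the value vectors: relevant words contribute more.
        outputs.append([sum(a * v[j] for a, v in zip(wts, values))
                        for j in range(len(values[0]))])
        weights.append(wts)
    return outputs, weights
```

For example, with 2-dimensional embeddings `[[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]` and the 2x2 identity matrix for all three projections, the first word gives equal weight to the first and third words (whose keys match its query) and less weight to the second. Each output is computed only from the inputs, never from another position's output, which is why all positions can be processed in parallel.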

For instance, when the model processes the word "it", a self-attention layer in a trained model can focus on "a robot": the vector it produces for "it" is the sum of the value vectors of the words in the segment, each multiplied by its score, with "a" and "robot" receiving the highest scores.
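
To make the arithmetic concrete, the sketch below computes the weighted sum for "it" restricted to three words, "a", "robot", and "it". The value vectors and scores are hypothetical numbers chosen for illustration; a trained model learns the real ones, and real vectors have many more dimensions.

```python
# Hypothetical 3-dimensional value vectors and softmax scores for the word "it".
values = {
    "a": [0.2, 0.1, 0.0],
    "robot": [0.9, 0.4, 0.3],
    "it": [0.1, 0.8, 0.5],
}
scores = {"a": 0.2, "robot": 0.7, "it": 0.1}  # softmax outputs, so they sum to 1


def weighted_sum(values, scores):
    # Multiply each word's value vector by its score and add the results.
    dim = len(next(iter(values.values())))
    return [sum(scores[word] * vec[i] for word, vec in values.items()) for i in range(dim)]


z_it = weighted_sum(values, scores)  # approximately [0.68, 0.38, 0.26]
```

Because "robot" has the largest score, its value vector dominates the result, which is how the representation of "it" comes to carry information about the robot.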

Key Differences

While both GANs and Transformers can serve as generative AI architectures, they generate new content in different ways. A GAN's generator learns to mimic real data indirectly: it never sees the training data itself and improves only through the discriminator's feedback. A generative Transformer is typically trained to predict the next element of a sequence (for example, the next word) and uses self-attention to model the relationships between elements of the input sequence. GANs are particularly good at generating high-quality images and videos, while Transformers excel at natural language processing tasks.

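Another key difference between GANs and Transformers is their training process. GANs can be difficult to train because the two networks must stay balanced: training can oscillate instead of converging, the generator can collapse to producing only a few kinds of output (mode collapse), and hyperparameters need careful tuning. Transformers, on the other hand, are trained by minimizing a single, fixed loss, such as the cross-entropy of predicting the next token, so their training is generally more stable and requires less hyperparameter tuning.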

In conclusion, GANs and Transformers are two key architectures of generative AI that have made significant contributions to the field of artificial intelligence. While both are effective at generating new content, they differ in their approach and application.

Both architectures have their strengths and weaknesses, and their continued development and refinement will lead to even more impressive applications of generative AI in the future.

Recommended references and resources for Generative AI Architectures:

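Generative Adversarial Networks (GANs) in the Wolfram Language, Wolfram Blog (2020): https://blog.wolfram.com/2020/08/18/generative-adversarial-networks-gans-in-the-wolfram-language/

Goodfellow et al., Generative Adversarial Nets, Advances in Neural Information Processing Systems 27 (2014): https://papers.nips.cc/paper_files/paper/2014/hash/5ca3e9b122f61f8f06494c97b1afccf3-Abstract.html

ChatGPT, which helped me edit the article: https://chat.openai.com/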